Hello everyone! In this blog post I’m going to discuss my experiences, and our work here at Spritle, with what is considered the next wave of AI: computation on the edge, or Edge AI.

Let’s open with a seemingly simple question:

What is Edge AI, why is it important and how do we use it?

Edge AI means running AI algorithms locally on the device itself. It is considered the next huge wave in AI because these small devices have the potential to solve many of the problems faced when deploying Machine Learning algorithms.

Let’s first discuss the problems faced while deploying an algorithm, so that we have a better understanding of what these small devices address.

Pre-Edge AI solutions for deploying AI algorithms

Before the advent of these efficient and powerful credit-card-sized computers, there were two popular ways of deploying Machine Learning algorithms:

1. Cloud Services

Here, we upload the data we want to process from our computer to a cloud platform through an API (for example, a REST API) and receive the output of our algorithms in the response.

This method requires an internet connection to pass data between the server and our client.
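As a rough illustration of this round trip, here is a minimal sketch of a client that uploads an image and reads back the result. The endpoint URL, the raw-bytes upload format, and the JSON reply are all assumptions made for the example; a real cloud service defines its own API.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL of your own inference service.
API_URL = "https://example.com/v1/detect"


def build_inference_request(image_bytes: bytes, url: str = API_URL) -> urllib.request.Request:
    """Build a POST request that uploads raw image bytes to the inference API."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def run_cloud_inference(image_bytes: bytes, url: str = API_URL, timeout: float = 5.0) -> dict:
    """Send the image to the server and decode the JSON reply (needs internet access)."""
    request = build_inference_request(image_bytes, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Every call to run_cloud_inference pays for one full network round trip, which is where the disadvantages discussed in this section come from.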

Like everything, this has many advantages and disadvantages.

Advantages:

- Low cost of getting started with AI, as we need not invest huge amounts in infrastructure and only pay as we go for the services we use.

- Reliable uptime and APIs. The developers need to concentrate only on the core ML algorithms, while the cloud service takes care of load management, latency, and hardware.

- Easy updates, since the model lives on the server and can be redeployed there through DevOps pipelines without touching the clients.

Disadvantages:

- Internet speed greatly affects the speed of the application. For applications that require a very high FPS, it becomes a major bottleneck.

- Security can become a concern when we send sensitive data to be processed on other computers.
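To see why the network becomes the bottleneck, here is a small worked estimate. It assumes each frame is sent only after the previous reply has arrived, and the latency figures are illustrative, not measurements.

```python
def max_sequential_fps(round_trip_ms: float, inference_ms: float) -> float:
    """Upper bound on FPS when each frame waits for the previous reply."""
    return 1000.0 / (round_trip_ms + inference_ms)


# Illustrative numbers only: 80 ms network round trip, 20 ms inference.
cloud_fps = max_sequential_fps(round_trip_ms=80.0, inference_ms=20.0)
local_fps = max_sequential_fps(round_trip_ms=0.0, inference_ms=20.0)

print(f"cloud: {cloud_fps:.1f} FPS, local: {local_fps:.1f} FPS")
# prints "cloud: 10.0 FPS, local: 50.0 FPS"
```

With these assumed numbers, the same model drops from 50 FPS to 10 FPS purely because of the time spent on the network.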

2. In-house Infrastructure

We buy our own GPUs and CPUs, set up the connections ourselves, and can even use load balancers to utilize our resources efficiently.

Let’s discuss the advantages and disadvantages

Advantages:

- Secure, as the data can be transferred over a LAN that need not be exposed to the outside world.

- Full freedom for customization to meet our specific needs.

- Faster processing, since latency is lower and less time is spent transferring data.

Disadvantages:

- The huge cost of investment

Consider a scenario where we need a system that runs facial recognition and other biometric checks to admit only authorized personnel into the building at the start of the day. Such a system needs to be both fast and secure.

However, it is at peak usage only during the morning, probably for about an hour, which means the hardware may sit idle for the rest of the day, especially if it is not a tech firm.

- The cost of upgrading and replacement

Upgrades and updates are constants in the software industry.

If a new architecture or new software is not compatible with the systems we have in place, our solutions may end up subpar compared to others.

The cost of repair after any failure can become a huge expense too.
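To put the facial-recognition scenario above into numbers, here is a rough utilization estimate. It assumes the hardware stays powered for a full 24-hour day and is busy only during the single peak hour; both figures are illustrative.

```python
def utilization(busy_hours: float, powered_hours: float = 24.0) -> float:
    """Fraction of powered-on time during which the hardware does useful work."""
    return busy_hours / powered_hours


# Assumed figures: one busy hour out of a 24-hour day.
share = utilization(busy_hours=1.0)
print(f"busy {share:.1%} of the day, idle {1 - share:.1%}")
# prints "busy 4.2% of the day, idle 95.8%"
```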

The saviour – Edge AI

Now the problems listed above are addressed by these devices.

Advantages:

- Low cost of investment

- Less time from development to deployment

- Easy prototyping

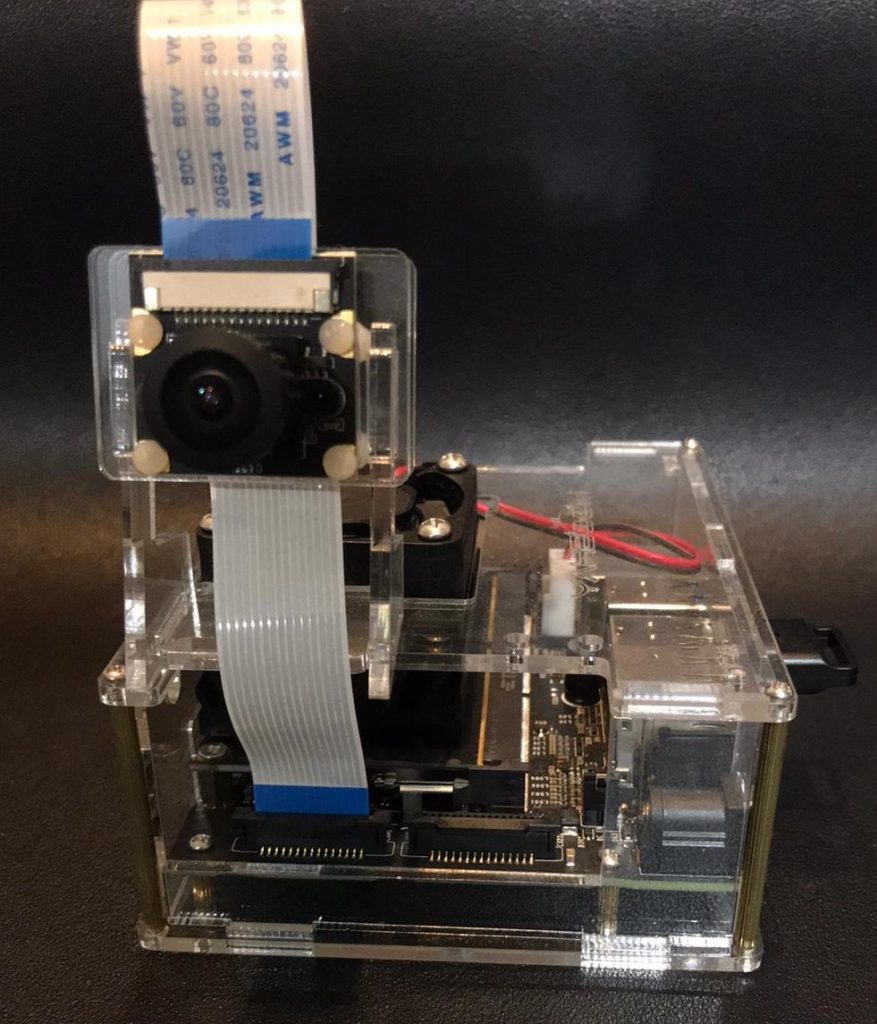

Inference on Jetson Nano

At Spritle, we were using a YOLOv3 model for one of our object detection tasks.

We wanted to make use of the onboard GPU for inference.

To achieve this, we followed a few steps:

Step-1: Install the Jetson Nano Developer Kit SD card image: https://www.pyimagesearch.com/2019/05/06/getting-started-with-the-nvidia-jetson-nano/

Step-2: Compile AlexeyAB’s Darknet with the LIBSO option turned on (LIBSO=1 in the Makefile) so that the build produces libdarknet.so: https://github.com/AlexeyAB/darknet#how-to-compile-on-linux-using-make

Step-3: Copy the resulting libdarknet.so file somewhere safe, since darknet.py loads it at runtime.

Step-4: Modify the darknet.py file and use it to run the inference: https://github.com/AlexeyAB/darknet/blob/master/darknet.py

Step-5: Done
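Step-2 comes down to switching a few flags in Darknet’s Makefile before running make. The sketch below shows one way to do that on the Makefile text. It assumes the flags appear as FLAG=0 lines and uses the names GPU, CUDNN, and LIBSO; check them against the Makefile in your own checkout, and note that the helper name enable_flags is made up for this example.

```python
import re


def enable_flags(makefile_text: str, names: tuple = ("GPU", "CUDNN", "LIBSO")) -> str:
    """Return the Makefile text with each listed NAME=0 line switched to NAME=1."""
    for name in names:
        # Match the flag only when it fills a whole line, e.g. "LIBSO=0".
        pattern = re.compile(rf"^{re.escape(name)}[ \t]*=[ \t]*0[ \t]*$", re.MULTILINE)
        makefile_text = pattern.sub(f"{name}=1", makefile_text)
    return makefile_text


# Tiny stand-in for the top of a Makefile (not the real file).
sample = "GPU=0\nCUDNN=0\nOPENCV=0\nLIBSO=0\n"
print(enable_flags(sample))
```

Flags that are not listed, such as OPENCV in the sample, are left untouched.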

Sounds simple, doesn’t it?

With the above setup, we were able to achieve roughly 14 FPS with a custom-trained tiny YOLOv3 model on the Jetson Nano.
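A figure like this can be measured with a simple timing loop. The sketch below is not the code we ran: fake_detect is a stand-in that sleeps for about 10 ms, and in practice you would pass in whatever detection function your modified darknet.py exposes.

```python
import time
from typing import Callable, Iterable


def measure_fps(detect: Callable[[object], object], frames: Iterable[object]) -> float:
    """Run detect() on every frame and return the average frames per second."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        detect(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if elapsed > 0 else float("inf")


def fake_detect(frame):
    """Stand-in for the real detector: sleeps ~10 ms to imitate inference."""
    time.sleep(0.01)
    return []


print(f"{measure_fps(fake_detect, range(20)):.1f} FPS")
```

Averaging over many frames matters because the first few inferences are often slower while the model and GPU warm up.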

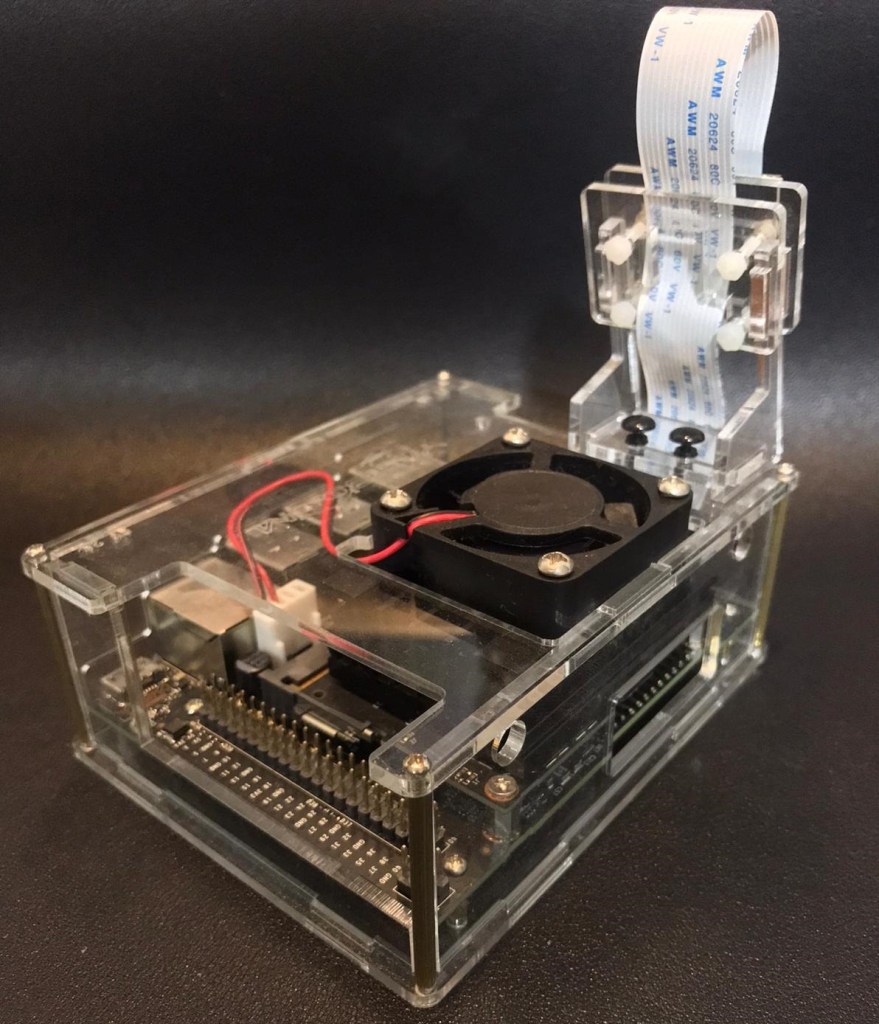

Note that during long runs we noticed a slight heating issue with our device, so we attached a cooling fan to fix the problem.
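One way to keep an eye on heating is to read the board temperature while the model runs. The sketch below assumes the standard Linux thermal sysfs interface, which reports millidegrees Celsius; the zone number used here is a guess and varies from board to board.

```python
from pathlib import Path

# Assumed path: zone numbering differs between boards, so check yours.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert a sysfs reading such as '45500' into degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_temperature_c(path: Path = THERMAL_ZONE) -> float:
    """Read the current temperature of one thermal zone in degrees Celsius."""
    return parse_millidegrees(path.read_text())


try:
    print(f"{read_temperature_c():.1f} °C")
except (OSError, ValueError):
    # The file is missing or unreadable on machines without this zone.
    print("Could not read", THERMAL_ZONE)
```

Logging this value alongside the FPS makes it easier to tell whether a slowdown during a long run is related to heat.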

So that was our experience with these small, powerful, cutting-edge devices of tomorrow. Please share your own experiences or drop your questions in the comment section below. Thank you!

References and Further Reading

Below are some of the articles that helped me in deploying the model